Have academic standards really stagnated?

20th January 2015

In his recent blog post, Sam Freedman asks why, if pupils have become more sensible and happier, we haven’t seen “a commensurate, observable, rise in academic standards?”

As Sam points out, it’s a question we need data and measures to answer. In this blog I ask what information we have available and whether it really does show standards have failed to rise.

“Academic standards” could be indicated by a number of different measures. GCSE performance is, of course, one of them, and the benchmark of 5 A*-C grades including English and Maths (5ACEM) has a number of characteristics in its favour:

1. It covers a broad range of subjects, so it could indicate a general improvement in academic standards rather than improvement in specific topics only.

2. It is based on curricula that are taught and learnt in schools, making it a purposeful indicator of the academic standards achieved by our national education system.

3. It is high-stakes, giving both schools and students incentives to perform well.

4. It has known targets and sanctions linked to it which make goals clear to schools and students.

However, I have to agree with Sam that in answering the standards question, national exams’ limitations outweigh their benefits.

- Firstly, they have changed too much over time (this TES piece, for example, suggests that changes to the exam system led to the 2014 drop in 5ACEM);

- Secondly, teaching-to-the-test may have undermined academic standards;

- Thirdly, grade inflation may have made higher grades easier to achieve.

These issues present real problems for anyone trying to answer Sam’s question; thus the search for an alternative begins.

Performance in international tests such as PISA, TIMSS and PIRLS is a popular alternative, and Sam refers to Professor Robert Coe’s analyses of these to suggest there has not been much change in academic standards between 1995 and 2011.

But are international tests any better than our admittedly flawed national data? Both Freedman and Coe highlight the following problems:

1. They were not designed to evaluate change over time. Because they have a ‘cross-sectional sampling’ design, they test a different group of pupils each time. Differences in test scores from one round to the next therefore can’t always be attributed to changes in academic standards. The test content and data collection methods also change between rounds, making comparisons tricky.

2. There are difficulties in interpreting and using the data. The tests are designed for cross-country comparisons of the standards achieved by pupils, and these standards depend on a whole range of societal and other factors, not just the education system. As such, international tests allow us to draw conclusions about relative country performance, but making inferences about countries’ education systems is much harder. PISA results are also estimates with wide margins of error, which makes it difficult to reach reliable conclusions about change from one round to the next.

3. They are low-stakes. This means schools and teachers have no incentive to do well in them. On the one hand this removes ‘perverse incentives’; on the other, most pupils and schools prioritise the tests on which their futures actually depend and see international tests as a distraction. This may cause problems for the DfE’s plans to use PISA performance to evaluate the impact of exam reform.

In addition to these three problems raised by Freedman and Coe, it is also worth noting that:

1. International tests are subject-specific, so they only tell us about standards in a limited number of subjects.

2. PISA is a “real-life skills” based test rather than a curriculum-based one, so it doesn’t align with the approach to teaching in English schools.

3. International test results are often reported as average scores, whilst our system has been built around threshold measures such as the percentage of pupils achieving 5 A*-C. A more direct comparison would look at the proportion of students in England reaching a certain threshold in PISA (say 500 points).
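To see why an average and a threshold measure can tell different stories, here is a minimal Python sketch. The two cohorts and their scores are invented purely for illustration (they are not real England or PISA data), and the 500-point cut-off is simply the example threshold mentioned above.

```python
from statistics import mean

# Invented PISA-style scores for two hypothetical cohorts (illustration only,
# not real data). Both cohorts have been chosen to share the same average.
cohort_a = [420, 460, 480, 500, 520, 540, 580]
cohort_b = [440, 470, 490, 495, 505, 540, 560]

def share_at_or_above(scores, threshold=500):
    """Proportion of pupils whose score reaches the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)

for name, scores in (("cohort_a", cohort_a), ("cohort_b", cohort_b)):
    print(f"{name}: mean={mean(scores):.0f}, share>=500={share_at_or_above(scores):.2f}")
# cohort_a: mean=500, share>=500=0.57
# cohort_b: mean=500, share>=500=0.43
```

Both hypothetical cohorts average 500 points, yet the share reaching the threshold differs, which is why an average-based PISA figure and a threshold-based measure like 5ACEM may not move together.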

All of these problems make judgments about change in academic standards using international test data difficult.

So if neither international tests nor national tests provide reliable measures of change in academic standards, what can we do?

One option is to combine the two (as Dr. John Jerrim does here with KS3 results; he plans to do the same with GCSEs in the future). Linking National Pupil Database exam data to PISA scores will allow analysts to see how (if at all) the two are connected. For example, one could look at pupils achieving different grades in Maths GCSE and compare these to their PISA scores. Jerrim’s KS3 piece suggests the two types of exams may in fact be quite highly correlated. Over time, one could therefore see whether the average PISA score of pupils achieving specific GCSE grades is increasing, potentially indicating an improvement in academic standards.
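The comparison described above (average PISA score of pupils with a given GCSE grade, tracked over time) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the pupil IDs, years, grades and scores are all invented, and the record layout is hypothetical rather than the real National Pupil Database or PISA data structure.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical linked records, invented for illustration only. The layout
# (pupil_id, exam_year, maths_grade) is an assumption, not the NPD schema.
gcse_results = [
    ("p1", 2012, "A"), ("p2", 2012, "B"), ("p3", 2012, "A"),
    ("p4", 2015, "A"), ("p5", 2015, "B"), ("p6", 2015, "A"),
]
# Hypothetical PISA scores keyed by the same (assumed) pupil identifier.
pisa_scores = {"p1": 560, "p2": 510, "p3": 580, "p4": 590, "p5": 515, "p6": 600}

def mean_pisa_by_grade_and_year(results, scores):
    """Average PISA score of linked pupils for each (year, GCSE grade) pair."""
    groups = defaultdict(list)
    for pupil_id, year, grade in results:
        if pupil_id in scores:  # keep only pupils found in both datasets
            groups[(year, grade)].append(scores[pupil_id])
    return {key: mean(vals) for key, vals in groups.items()}

averages = mean_pisa_by_grade_and_year(gcse_results, pisa_scores)
for grade in ("A", "B"):
    change = averages[(2015, grade)] - averages[(2012, grade)]
    print(f"Grade {grade}: change in mean PISA score = {change:+.0f}")
# Grade A: change in mean PISA score = +25
# Grade B: change in mean PISA score = +5
```

The sketch only computes the quantity; deciding what a change in it means still runs into the problem of separating real improvement from other factors.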

Yet even this approach doesn’t solve the big problem of how we disentangle the relative contribution made by improvements in academic standards from other factors, like grade inflation.

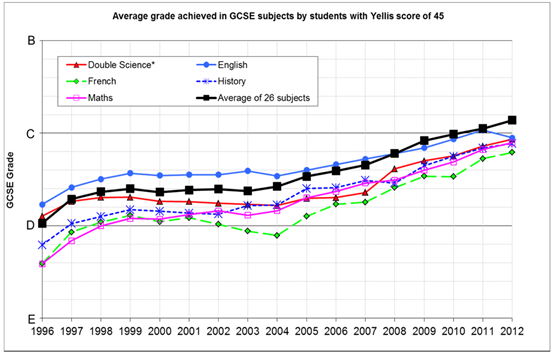

Changes in GCSE grades achieved by candidates with the same maths and vocabulary scores each year

Commenting on the above graph, Coe notes:

“Even if some of the rise is a result of slipping grade standards, it is still possible that some of it represents genuine improvement… It is not straightforward to interpret the rise in grades… as grade inflation.”

In other words, even if we accept that some of the rise in results is due to grade inflation (as seems likely), we cannot work out how much.

So even with Jerrim’s approach, we cannot draw definitive conclusions about standards since so many factors play a role, including real improvements in teaching and learning, the way assessments are carried out, and their content (the full list of factors Coe identifies can be found here).

Ultimately, to answer the question of whether academic standards really have stagnated, we need to be clear about what we mean by standards, and how we measure them (as Coe explains here). For now, as Sarah found in her analysis of child well-being, when it comes to ‘the standards puzzle’, the safest conclusion to draw is none at all.

This is the second in a series of three LKMco blogs exploring the link between academic standards and well-being. You can read the first blog, White Lightening and Happy Teenagers, here.
