Six reasons I changed my mind about the reception baseline

4th April 2015

A new “baseline test” will soon be introduced for four-year-olds in England. From 2016, children will take a short test within six weeks of starting school, so that everybody knows their starting point and the DfE has accurate numbers with which to hold schools to account for kids’ progress.

My initial reaction to the baseline test was “What, there isn’t one already? How do they know what’s going on!?” As a secondary teacher, I have been forced to drink all manner of data Kool-Aid; I am used to the idea that my kids (and I) will be judged on how much progress they have made since starting with me. Shouldn’t primary schools and teachers be judged similarly? How can you know whether your teaching is having any effect at all if you don’t know where the kids started? If there have to be accountability measures for primary schools, I’d certainly rather they were based on progress than on absolute outcomes (otherwise you’re really just looking at a school’s intake).

However, researching forthcoming pieces for the Journal of the College of Teachers and Education Investor led me to question many of my initial assumptions.

I have six main concerns:

1. The DfE have stated that the baseline’s sole purpose is accountability, not providing useful, formative information for teachers, parents or pupils.

I asked them if there were any other intended benefits and they said no, only accountability. This is silly. Of course accountability is important; primary schools receive substantial public money, and are responsible for the development of children in some of their most critical years. However, assessment should never happen purely for accountability; it should contribute to pupil learning too.

The Early Years Foundation Stage Profile Handbook got this right: the primary purposes of that assessment were to inform parents, support transition and help teachers plan. Accountability came after these purposes: “In addition, the Department considers that a secondary purpose of the assessment is to provide an accurate national data set…”

2. Tests are required to report a child’s level of attainment as a single scaled score.

This fails to capture the fact that in the Early Years it is normal for children of the same age to be at a wide range of developmental stages, and that these stages are not necessarily sequential or linear. It is not a case of learning a phoneme, then a “CVC” (consonant-vowel-consonant) word, and so on. “Development Matters” explains this and shows young children’s typical development in overlapping stages of up to twenty months in duration. Whether a child develops the ability to “follow instructions with several ideas and actions” at 40 months or at 60, both are “typical”. Giving children a single score at one point in time on one scale ignores this crucial point, and wrongly suggests that one child is “ahead” of the other.

3. The DfE have also said that the scores must not be age-standardised.

This is bizarre: un-standardised scores will be unreliable (something that many providers have acknowledged, as they provide age-standardised scores for teachers). When children are only four years old, a few months (or even weeks) constitute a substantial chunk of their lives, making their scores much more sensitive to age than later on (and we know from EPPSE that being summer-born remains a significant disadvantage even at GCSE). Summer-born children will score low, their parents will be unnecessarily worried, and if targets are set for the rest of their academic lives based on this assessment (which, given the way data is used in schools, seems likely), that disadvantage will persist.

4. The baseline assessments change what is assessed in the Early Years.

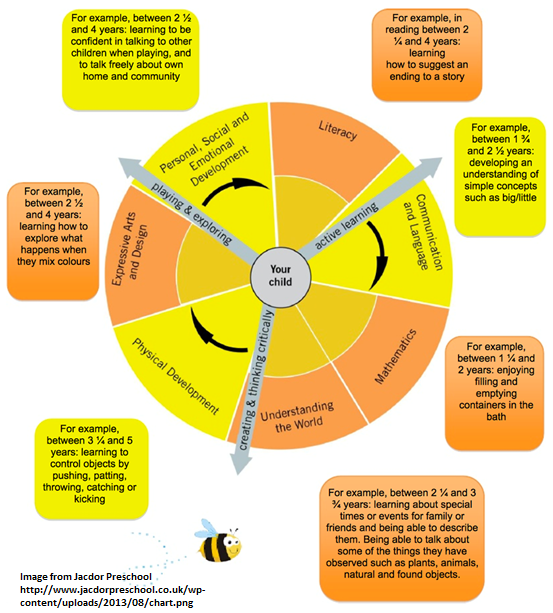

Instead of the wide range of social, emotional, physical and creative outcomes found in the EYFS Profile, the new assessments focus only on language, literacy and mathematics. Professor Coe explained to me that these are the best predictors of attainment in later years, and can be measured more accurately than factors like social skills or creativity. However, what isn’t assessed often disappears from the curriculum. There may be benefits to focusing more on literacy and mathematics in the early years if it gives children a solid foundation on which to build more complex skills later. Yet although there is research pointing in both directions, most Early Years educators and developmental psychologists seem to come down in favour of a broad curriculum that includes social and emotional development rather than a narrower focus.

5. Six different providers have been approved, each offering a very different test.

CEM, NFER and SpeechLink’s assessments focus on maths, literacy and language, with optional sections on personal, social and emotional development (which are not collected by the DfE for accountability). CEM’s assessment involves a child answering questions on a tablet, whilst NFER’s involves practical activities that the child carries out face to face with an adult. Meanwhile, Jan Dubiel, National Development Manager at Early Excellence, explained to me that their assessment is designed so that a child cannot score highly unless they also score highly on particular elements of social and emotional development. Given how much the approved assessments differ, meaningful comparison between schools that use different providers will surely be impossible.

6. It’s not compulsory.

Given the problems I’ve outlined above, you might think I’d welcome this. But it is in fact problematic. If you don’t use an approved baseline, absolute attainment will be the only performance measure available for your school. If I were Head of a primary with a traditionally high-attaining intake, why would I use a baseline that risks highlighting low progress?

The DfE’s specification for baseline tests departs so dramatically from accepted best practice that I asked them which experts they had consulted. I was directed to the public consultation which reveals that:

- 57% of respondents did not feel that the principles the DfE had outlined would lead to an effective curriculum and assessment system.

- 61% disagreed with the idea of a scaled score, decile ranking and value-added measure (the DfE have subsequently abandoned the idea of decile ranking).

- Only 34% agreed with the idea of introducing a baseline assessment in reception.

When I asked if any experts had been involved in any other ways, they replied that I would have to submit a Freedom of Information request to find out.

A response to my request is due in a week… watch this space!
