Causation in Evidence-Based Policymaking and Education: 3 Tools to Help You Think About It More Effectively

by Baz Ramaiah

16th April 2021

This is a story about how a misunderstanding of a simple concept lost taxpayers $10 billion.

In the first part of this blog, I’ll share this story. All of the characters in it are well-meaning professionals. They acted out a script that will be familiar to anyone interested in evidence-based policymaking. There’s also a clear moral to the story for best practice in evidence-based policymaking, one which has become even more pertinent in the wake of COVID.

I’ll spend the second part of this blog explaining how this moral can be put into practice. To do this, I’ll introduce three thinking tools for improving planning and prediction of policy effectiveness.

So, let’s begin.

What happened when California tried to reduce their class sizes?

In 1994, California’s fourth grade students were ranked last among 39 states for educational achievement. Policymakers in the state wanted to reduce class sizes to deal with this.

They had ostensibly good evidence to believe this would be an effective policy change. The STAR project, a randomized controlled trial (RCT) in Tennessee in 1985, found that students randomly assigned to smaller classes performed better in tests than their peers in larger classes. This confirmed previous meta-analyses.

Further support for the policy came from the public, with families believing that smaller class sizes would lead to more attention for their own child.

So, the policy was implemented at pace, with the first cohort coming into new smaller classes within 12 months. Costs were substantial – $1 billion a year, increasing to $1.7 billion a year after a few years had elapsed.

To the surprise of all involved, academic achievement actually got worse in many schools. Where it did improve, exhaustive and rigorous evaluations found that this had almost nothing to do with the reduction in class size. The policy had been a failure.

What went wrong in California?

The California case study has been subject to several volumes of retrospective analysis. All evaluations seem to arrive at the same conclusion:

The policymakers in question made a terrible misjudgement about causation.

The policymakers read the available evidence on class-size reduction. They even drew on an RCT that had been conducted in the same country. But they concluded:

- That class-size reduction caused increased academic achievement in Tennessee;

- Therefore, class-size reduction will cause increased academic achievement in California.

‘It worked there, so it’ll work here’. It’s a heuristic that we all follow all the time, especially in social policy. But it’s a judgment that depends on a particular understanding of causation. And this particular understanding is very mistaken.

How to think about causation

Let’s imagine that I drop a hammer and a feather out of my window. The hammer will fall much faster than the feather. We could do this pretty much anywhere in the world and we’d get the same result: dropping the hammer and feather at the same time will cause the hammer to hit the ground first.

Now imagine Elon Musk flies me to the moon, hammer and feather in tow. If I were to drop the hammer and feather there, then the lack of air resistance means they’d both fall at the same speed. The causal relationship that held on Earth no longer holds on the Moon.

If you change the surrounding and supporting conditions, then you change the causal relationship. There lies the moral of our California case study: you cannot tell if a policy will cause the same effects in a new setting without understanding the conditions that supported that causal effect in the original setting.

Causation in the time of COVID

This topic has taken on particular relevance during COVID. Researchers and policymakers from across the planet have been drawing on research from previous years to plan interventions, hoping they will achieve the same positive effects as they did in times past. But so many of the causal relationships identified in previous research depend on conditions that may not hold in the radically transformed landscape of a post-COVID world.

How can policymakers think more clearly about causation?

So how can policymakers draw on evidence to understand and predict policy effectiveness? In the rest of this blog I’ll use California’s class size reduction policy to explain three thinking tools from Nancy Cartwright and Jeremy Hardie’s brilliant ‘Evidence Based Policy: A Practical Guide to Doing It Better’:

- The Argument Pyramid

- Vertical Search

- Horizontal Search

These simple tools could have been used to motivate better decision making in California in 1994. They can also help optimise policymakers’ decision making now.

The Argument Pyramid

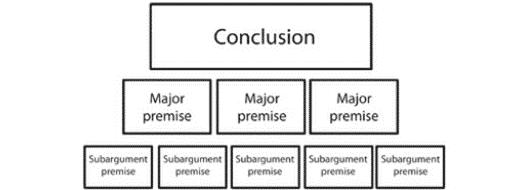

One way of supporting the analytic process of policy development is to use an Argument Pyramid.

Each box represents a different aspect of our reasoning process around a policy:

- Conclusion – The policymaking decision or recommendation that is an outcome of evidence-gathering and deliberation. It’s placed on the top of the pyramid as it is supported by all the claims underneath.

- Major Premises – These are the main pieces of evidence that support the policy recommendation. These would need to hold if the policy is going to be effective. These could be from original primary research or drawn from the wider literature.

- Subargument Premises – These are supporting claims that the Major Premises depend on. They are usually less controversial and better established than the Major Premises, hence they are closer to the ground.

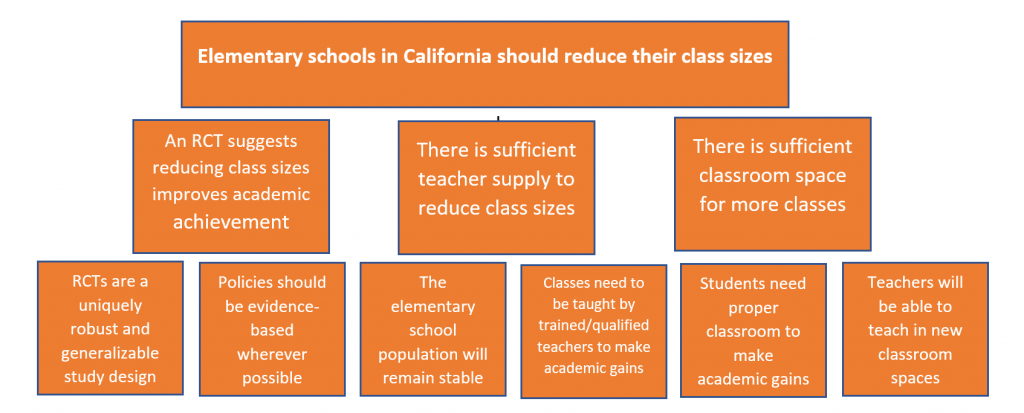

How could an Argument Pyramid have helped policymakers in California?

Let’s populate the Argument Pyramid with the California class-reduction story. From the research, it looks like we’d need to meet the following conditions to justify pursuing the policy:

The Argument Pyramid serves as a constant visual reminder that the success of a particular policy depends on specific conditions. If these conditions no longer hold, or turn out never to have held, then the argument for the policy collapses. The pyramid also acts as an explicit record of these supporting conditions, allowing each one of them to be identified and further researched.
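For readers who like to see a structure written down, here is a minimal sketch of an Argument Pyramid as a small tree of claims. It is only an illustration, not Cartwright and Hardie's own formalism: the `Claim` class, its `is_supported` and `weak_points` methods, and the wording of each premise are my assumptions, with the premises paraphrased from the California story in this post rather than taken from the figure.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Claim:
    """One box in the pyramid: a statement plus the claims it rests on."""
    text: str
    holds: Optional[bool] = None  # None = not yet checked (used for leaf claims)
    supports: List["Claim"] = field(default_factory=list)

    def is_supported(self) -> bool:
        """A leaf is supported only if it was checked and found true;
        any other box is supported only if every box beneath it is."""
        if not self.supports:
            return self.holds is True
        return all(claim.is_supported() for claim in self.supports)

    def weak_points(self) -> List[str]:
        """Return the leaf claims that are unchecked or known to be false."""
        if not self.supports:
            return [] if self.holds is True else [self.text]
        return [w for claim in self.supports for w in claim.weak_points()]


# Illustrative premises only, paraphrased from the story told in this post.
pyramid = Claim(
    "Reduce class sizes in California",
    supports=[
        Claim("Smaller classes raised achievement in Tennessee (STAR RCT)", holds=True),
        Claim(
            "The conditions that supported that effect also hold in California",
            supports=[
                Claim("Enough high-quality teachers are available", holds=False),
                Claim("Enough classroom space is available", holds=False),
            ],
        ),
    ],
)

print(pyramid.is_supported())  # False: the conclusion rests on premises that fail
print(pyramid.weak_points())   # the two sub-premises that need attention
```

The design choice worth noting is that the conclusion has no truth value of its own: it stands or falls entirely on the boxes beneath it, which is the point the pyramid is meant to make visible.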

But how do we identify these conditions? And how do we know whether they’ve been met? That brings us to our next two thinking tools…

Vertical Search

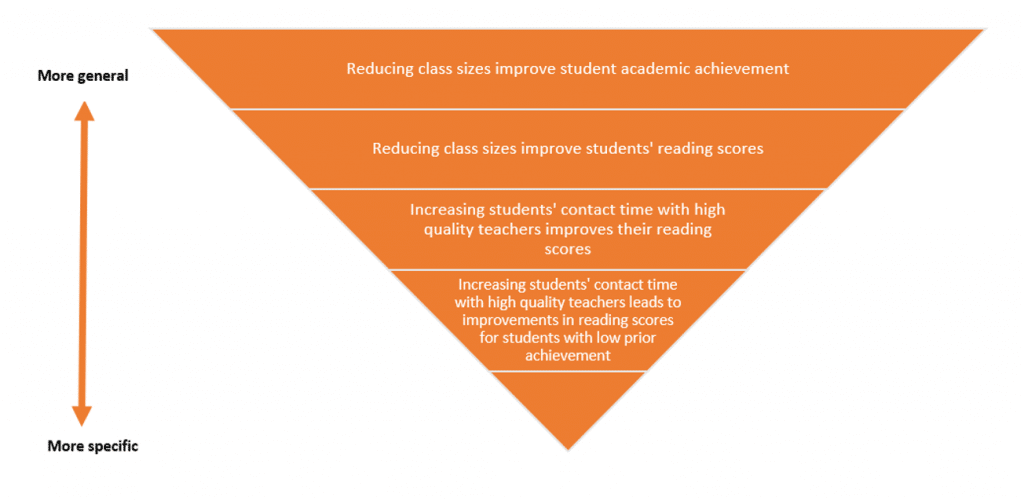

A central plank in California’s reasoning towards class-size reduction was the STAR RCT conducted in Tennessee. The policymakers decided to give the study’s findings a very general interpretation:

“Reducing class sizes improves students’ academic achievement”

RCTs are typically intended for a very general interpretation. That’s the whole purpose of the random assignment to experimental conditions. By eliminating the role of participant variables in explaining programme effects, you signal that the programme would achieve the same effect with any group of participants.

But it also would have been possible to look at the STAR RCT and pull out a very specific interpretation:

“This study shows that, under a particular set of social, cultural and environmental conditions, increasing students’ contact time with high quality teachers can support improvement in reading scores for students with low prior achievement”

Exploring the conclusions of a study as if they are very general and abstract or very local and specific is what Cartwright & Hardie call ‘Vertical Search’. It’s an essential tool when assembling an evidence base for a potential policy and securing its effectiveness.

How could Vertical Search have helped policymakers in California?

To show this power, let’s see how policymakers could have used Vertical Search in our California case study:

Policymakers could look up and down this diagram, ascending towards more abstract interpretations or descending to more specific ones. In this case, because we’re looking at an RCT where the default interpretation is a general one, we need to descend the ladder to investigate more specific interpretations.

By descending this ladder and picking out these more specific interpretations, we may spot details that point to important conditions that support the causal effect of the programme. For example, we might spot that the policy’s effectiveness depended on the deployment of high-quality teachers to support the new smaller classes. Prompted by this more specific interpretation, California’s policymakers might have asked themselves: do we have enough high-quality teachers to deliver a class-reduction policy that increases academic achievement?

The answer to this would’ve been resolutely negative. In fact, California had to massively scale up its recruitment, hiring 12,000 extra teachers in the first year of the programme. This would’ve been fine if these teachers were high-quality. However, because of the pace of delivery, many of these teachers were placed in classrooms without completing their training. Many of these new teachers were sent to schools in challenging areas as these communities had the biggest issues with recruiting and retaining more experienced staff. As a consequence, schools in these areas actually had their average academic achievement fall because of the new class size policy.

Horizontal Search

The complement to the Vertical Search is the Horizontal Search. This looks more explicitly at the conditions that supported a particular causal effect in a study. While a Vertical Search looks up and down a study finding to see how specifically or generally it should be interpreted, a Horizontal Search looks around a finding to see what conditions support that effect.

The process is pretty simple. We scan around a study’s reporting of a causal effect to find out what conditions allowed it to take place.

How could Horizontal Search have helped policymakers in California?

For example, a Horizontal Search may uncover that the Tennessee trial only involved schools that had enough classroom space to increase the total number of classes in school. This suggests that availability of classroom space might be a crucial supporting condition for class-size reduction to cause increased academic achievement. California’s policymakers could then have analysed whether they had enough classroom space to support a massive increase in the total number of classes across the state.

This would’ve quickly highlighted that they did not have enough space. Indeed, the lack of Horizontal Search at the time meant that the class-reduction policy involved moving many of the new smaller classes into spaces designed for music, arts, athletics, special educational needs support and other vital functions supported by schools. These inferior learning environments resulted in reduced academic achievement among these classes, even if they were smaller in size. What’s worse, these reallocations also prevented schools from delivering some of their most valuable extra-curricular and enrichment activities.
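The comparison at the heart of a Horizontal Search can be sketched in a few lines: list the conditions that supported the effect in the original study, then record whether each one is met, not met, or still unknown in the new setting. This is a hedged illustration under my own assumptions. The function name `horizontal_search_gaps` and the condition labels are hypothetical, and the two conditions are simply the ones discussed in this post.

```python
from typing import Dict, List, Optional


def horizontal_search_gaps(
    study_conditions: List[str],
    target_setting: Dict[str, Optional[bool]],
) -> Dict[str, List[str]]:
    """Sort the study's supporting conditions by their status in the new setting."""
    result: Dict[str, List[str]] = {"met": [], "not_met": [], "unknown": []}
    for condition in study_conditions:
        status = target_setting.get(condition)  # None if nobody has checked yet
        if status is True:
            result["met"].append(condition)
        elif status is False:
            result["not_met"].append(condition)
        else:
            result["unknown"].append(condition)
    return result


# Supporting conditions discussed in this post (labels are illustrative).
tennessee_conditions = [
    "enough classroom space for the extra classes",
    "enough high-quality teachers for the extra classes",
]

# What a check of California's situation would have recorded, per this post.
california = {
    "enough classroom space for the extra classes": False,
    "enough high-quality teachers for the extra classes": False,
}

gaps = horizontal_search_gaps(tennessee_conditions, california)
print(gaps["not_met"])  # both conditions would have been flagged before rollout
```

Keeping a separate "unknown" bucket is deliberate: a condition nobody has investigated is a warning sign in its own right, not evidence that the condition holds.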

It’s clear then that the Horizontal Search could’ve picked up factors that would’ve at least delayed California’s delivery of the class-reduction policy. This would’ve ensured the $10 billion was better spent – whether on a more suitably prepared class-reduction policy or another programme entirely.

Conclusion

Taken together, these three tools could have helped California’s Department of Education anticipate that a class-reduction policy would not have the same effects in their setting as it had in others. More importantly, they could have shown them the conditions they would need to get in place to make sure it did have that same effect.

This isn’t just an abstract exercise in retrospective analysis. These thinking tools can be used today by anyone thinking about policymaking to properly understand and plan for how causation works in the real world. The Department for Education in the UK is in the process of delivering summer schools and the much-vaunted National Tutoring Programme. Both of these policies are predicated on research evidence from pre-COVID times. As such, unless the DfE have been cautious in their analysis of causation, they may have set the stage for a pair of ineffective policies.

The California class-reduction story doesn’t have a happy ending. However, with the help of these thinking tools, perhaps the story of the Department for Education’s COVID recovery plans can.
